How to Downplay Your Coding Disaster

Image: US Air Force

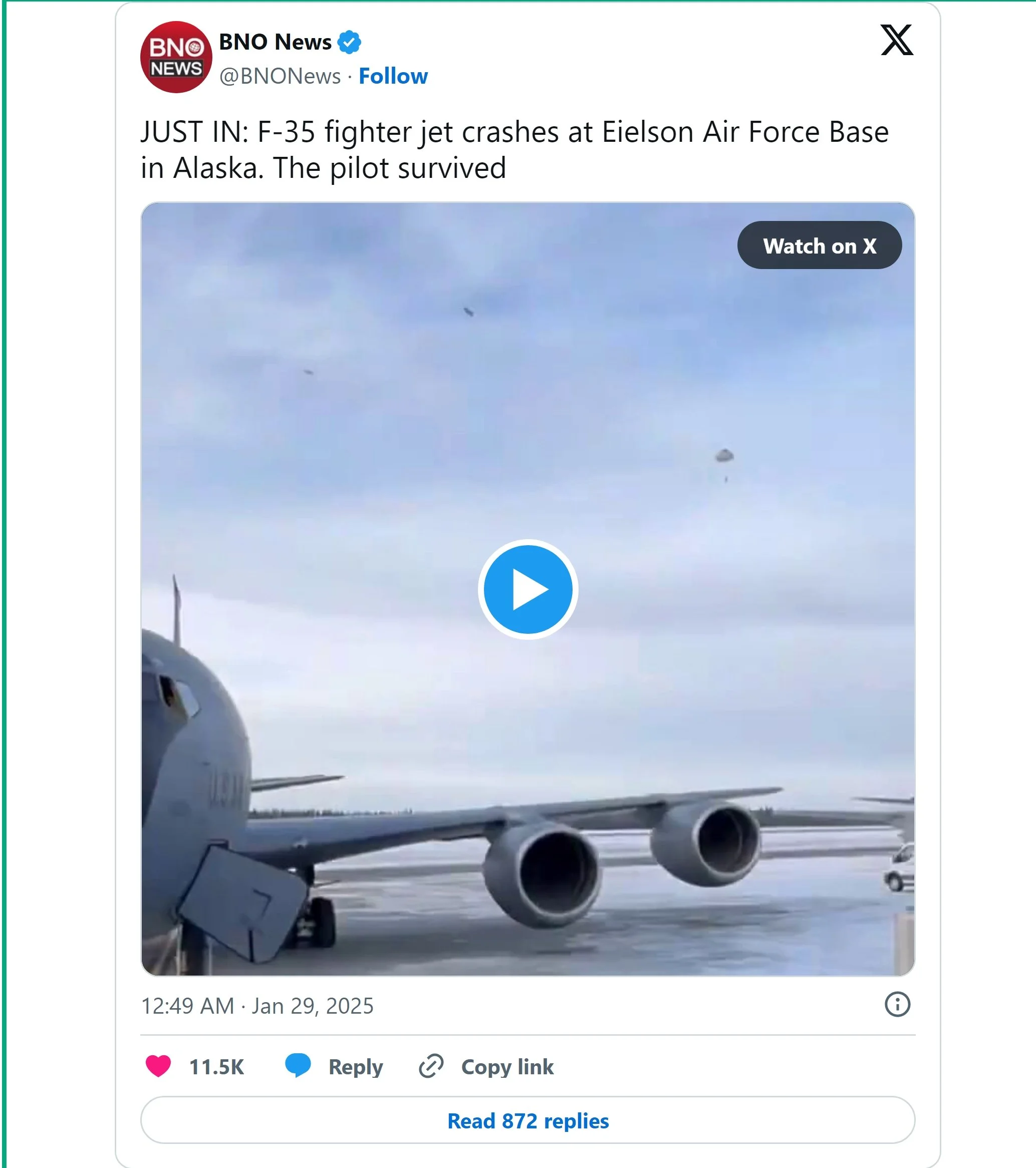

Yesterday an F-35 fighter jet crashed in Alaska, suffering “significant damage”. Don’t worry, the pilot survived, and you can see them parachuting out of the stricken aircraft in the following video.

The thing is, that “significant damage” quote reminds me of a few things that have been said on my recent software development projects, since that plane is clearly “beyond repair”.

The Tech Equivalent to Significant Damage

I’m going to say it. That bird was totalled.

The way it was described, “significant damage” really underplays what happened here. The US military aren’t the only masters of understatement, though. Software engineers have been in on the act for some time too.

Here are some of the classics.

“It’s just a minor bug.” (Cue production outage)

“The rollback went fine.” (So why is the database empty?)

“We followed best practices.” (The ones that got us here?)

“We’ll be fine with this timeline.” (Those famous last words)

I’m sure the witty lot who read this blog will come up with some more in the comments section, and I’d love to read them. Downplaying disasters isn’t pleasant, but it has become part of the game of software development.

The Art of Downplaying Disaster

The tech industry, much like the military, has perfected the art of making catastrophic failure sound like a minor inconvenience. The F-35 crash wasn’t a total loss, just significant damage.

That’s the same energy your manager brings when they tell you “There’s just a slight issue with the last deployment”, only for you to discover that the live service is down, users are fuming, and the CTO is on the warpath.

When a software release goes sideways, you can expect the following plan to spring into action.

A Crisis Meeting That Solves Nothing

I don’t know if you’ve been in a meeting like this. I remember when the payment systems went down at one of my old employers. The panic was real, and so were the pained expressions on people’s faces.

While we sat in the meeting (which stopped engineers from solving the problem), the following was said. Here’s a summary of what happened, and of what was never really drawn out in our post-mortem.

The Fun and Games

“We need to align on this” = Nobody has a clue what’s going on.

“What’s the ETA on the fix?” = We don’t have one, but let’s pretend we do.

“Let’s add a retrospective to the backlog” = A post-mortem nobody will read.

The Engineer Who Saw It Coming

This never actually got said, but perhaps I should have piped up with this:

“I did mention this in Slack last week…”

The Official Damage Control Statement

“It was an edge case.” (It wasn’t.)

“We’ll improve our processes.” (We won’t. We didn’t.)

“We’re learning from this.” (We certainly aren’t. We’ll repeat it in six months.)

Crash and Learn?

The best part of working in software development is that, much like the military, we never really learn from our mistakes. We rename them, we add more process, and then we do them all over again.

That F-35? It’ll be replaced with another one. That failed deployment? It’ll happen again next quarter.

Conclusion

When the inevitable happens, the reaction is just as predictable. Someone, somewhere, comes out of the woodwork with a straight face and says:

“Things were bad. We’ve gone through the root cause analysis, and we’re taking steps to ensure this never happens again.”

Sure you are. Just like last time.